An overview of using Docker containers: benefits, the build process via the Dockerfile, managing persistent data, docker-compose, etc.

ETR: 25 min

Introduction

Containerization is a technique to encapsulate software installation and execution in a so-called container.

The container is based on a pre-installed operating system like Ubuntu, CentOS, Alpine, etc. and provides its own file system. On this base, the required operating system packages (e.g. RPMs), software packages and configuration files can be installed into the container. These installation steps are automated by a build script named Dockerfile. Manual installation steps are not intended, which matches the automation favoured by agile development processes. The result of the build script is a named Docker image which can be stored in a binary repository (a Docker registry). This binary repository is the basis for automatic rollouts of the image onto e.g. virtual machines.

Such an image can be started as a container. Each container has its own file system, and a process launched inside a container cannot exceed this boundary, even if it is launched as root.

Image Build Process / Dockerfile

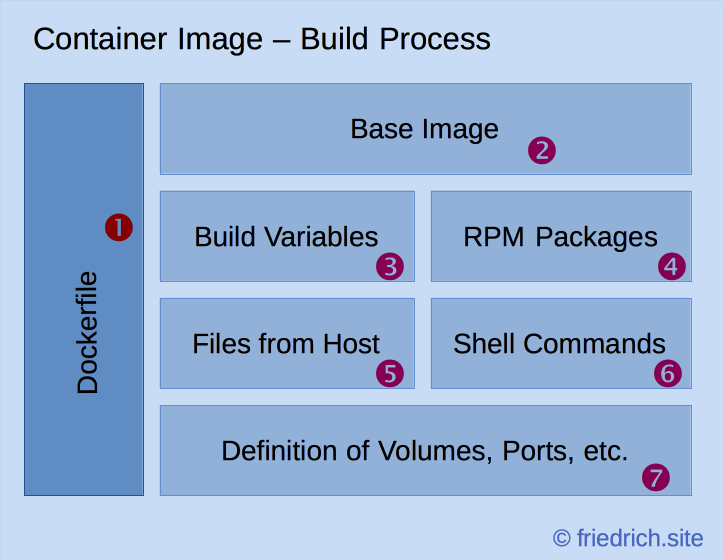

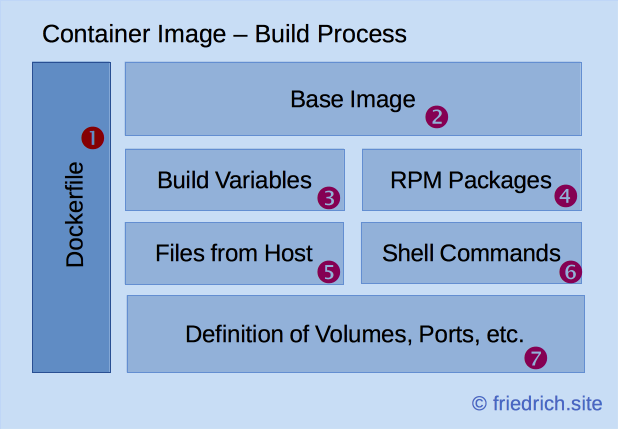

New container images can be built via a build script, the Dockerfile.

In brief, the Dockerfile consists of the following items:

- A base image, which is either an operating system like Ubuntu, CentOS, Alpine, etc. or another custom image.

- Build variables, which can be used to parametrize the build process, e.g. URLs, version numbers of software to be installed, or user names.

- Package installations. If the base image is an operating system, it has only minimal packages installed. The base image then has to be enriched by installing further packages (e.g. RPM packages), even for basic commands like “sudo”, “curl”, “wget”. This paradigm keeps your container small and comprehensible.

- Files, such as configuration files and shell scripts, which can be copied from the host/build system into the container.

- Shell commands, which can be executed inside the container, for example:

  - creating operating system users,

  - downloading and installing software packages,

  - creating or modifying files,

  - adjusting file permissions.

- Definitions of e.g. the TCP/IP ports to be exposed or the volume mount points available.

The Dockerfile automates the build process, and as a result all installation steps are (at least technically) documented.
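To make the items listed above concrete, here is a minimal Dockerfile sketch. It is only an illustration: the base image tag, the user name `appuser`, the script `start.sh`, port 8080 and the mount point `/data` are assumptions and do not come from a real project.

```dockerfile
# Base image: a minimal operating system image (tag chosen for illustration)
FROM ubuntu:22.04

# Build variable; can be overridden with `docker build --build-arg APP_USER=...`
ARG APP_USER=appuser

# The base image is minimal, so even basic tools must be installed explicitly
RUN apt-get update \
    && apt-get install -y --no-install-recommends curl ca-certificates \
    && rm -rf /var/lib/apt/lists/*

# Shell command: create an operating system user
RUN useradd --create-home "${APP_USER}"

# Copy a file from the host/build system into the image and adjust its permissions
# (start.sh is a hypothetical script located next to the Dockerfile)
COPY start.sh /usr/local/bin/start.sh
RUN chmod 755 /usr/local/bin/start.sh

# Definitions: TCP port to expose and volume mount point
EXPOSE 8080
VOLUME /data

# Run the container process as the unprivileged user created above
USER ${APP_USER}
CMD ["/usr/local/bin/start.sh"]
```

Assuming this file is saved as `Dockerfile` next to `start.sh`, running `docker build -t myapp:1.0 .` would produce an image named `myapp:1.0` (again a hypothetical name).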

Docker-Compose

docker-compose is a command-line tool which acts as a wrapper around the docker command.

Building images and launching containers can require a considerable number of command-line parameters, for example:

- The resulting image name (build process).

- The image name to launch (launch process).

- The ports to map (launch process).

- The volumes to mount (launch process).

Specifying all these parameters requires either a lot of manual typing or custom shell scripts.

To standardize this process, the docker-compose tool comes into play. The parameters which the docker command accepts on the command line can instead be defined in a YAML-based configuration file named docker-compose.yml.

Furthermore, a group of multiple containers to be launched together can be defined.
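As a rough sketch of how the parameters listed above could look in a docker-compose.yml, consider the following file. The service names `web` and `db`, the image name `myapp:1.0`, the ports, the paths and the password are illustrative assumptions. Older docker-compose releases additionally expect a top-level `version` key.

```yaml
services:
  web:
    build: .                      # build process: directory containing the Dockerfile
    image: myapp:1.0              # resulting image name, also the image to launch
    ports:
      - "8080:8080"               # ports to map (host:container)
    volumes:
      - ./config:/etc/myapp:ro    # host directory mounted read-only into the container
    depends_on:
      - db                        # start the db container before web
  db:
    image: postgres:16            # second container belonging to the same group
    environment:
      POSTGRES_PASSWORD: example  # placeholder value, not suitable for real use
```

With such a file in place, `docker-compose build` and `docker-compose up -d` replace the long `docker build -t ...` and `docker run -p ... -v ...` command lines.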

Persistent Data

It is recommended to separate persistent data from pure software installations.

So, software installations go into a software container. Data goes into a separate data container or onto a mounted volume (Docker, NFS, etc.).

With this approach,

- a properly installed software container can be reset to its image without losing any data,

- after each restart (with combined reset) all (wanted and unwanted) modifications are discarded,

- so each start is based on a clean image,

- corrupted installations caused by software bugs are avoided and

- fears of resetting the container or having undocumented changes are minimized.
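One possible way to realize this separation is a named Docker volume declared in docker-compose.yml. The following is a minimal sketch; the image name `myapp:1.0`, the volume name `app-data` and the path `/data` are assumptions.

```yaml
services:
  app:
    image: myapp:1.0        # hypothetical software image; keeps no data of its own
    volumes:
      - app-data:/data      # everything the application must keep is written here

volumes:
  app-data:                 # named Docker volume, stored outside the container's file system

# Resetting the software container to its image while keeping the data:
#   docker-compose down     # removes the container, keeps the named volume
#   docker-compose up -d    # starts a fresh container from the clean image
# Only `docker-compose down -v` would delete the volume as well.
```

Because the data lives in the volume, only the volume has to be backed up, while the container itself can be discarded at any time.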

Host System

- The host operating system should be optimized for hosting Docker containers, like CoreOS or Atomic, which provide e.g. the following features:

- Slim and focused on Docker containers.

- Additional services for service discovery, clustering, establishing private LANs between containers belonging to the same service group, etc.

- Operating system updates are performed in a git-like manner, so recent updates can be safely rolled back. A side effect is that such operating systems no longer have an RPM package manager.

- No software should be installed on the host operating system (except perhaps docker-compose).

- In practice this is rarely possible anyway because, as mentioned above, container-specialized operating systems don’t have an RPM package manager on board. So finding software supported by these operating systems is difficult (and not intended).

- The host system should be kept slim. Every piece of software (and its configuration) should be put inside a container. With this approach,

- the containers can be spread and re-used easily,

- the host system can easily be re-created,

- there is no need to back up a whole system/VM; only data containers / stores have to be backed up.

Prospects: Orchestration Tools

Orchestration tools like Kubernetes or Cloud Foundry automate processes like

- service discovery,

- forming and managing container/service clusters (pods),

- setup of new nodes (VMs based e.g. on CoreOS or Atomic) on demand.

Happy containerization!